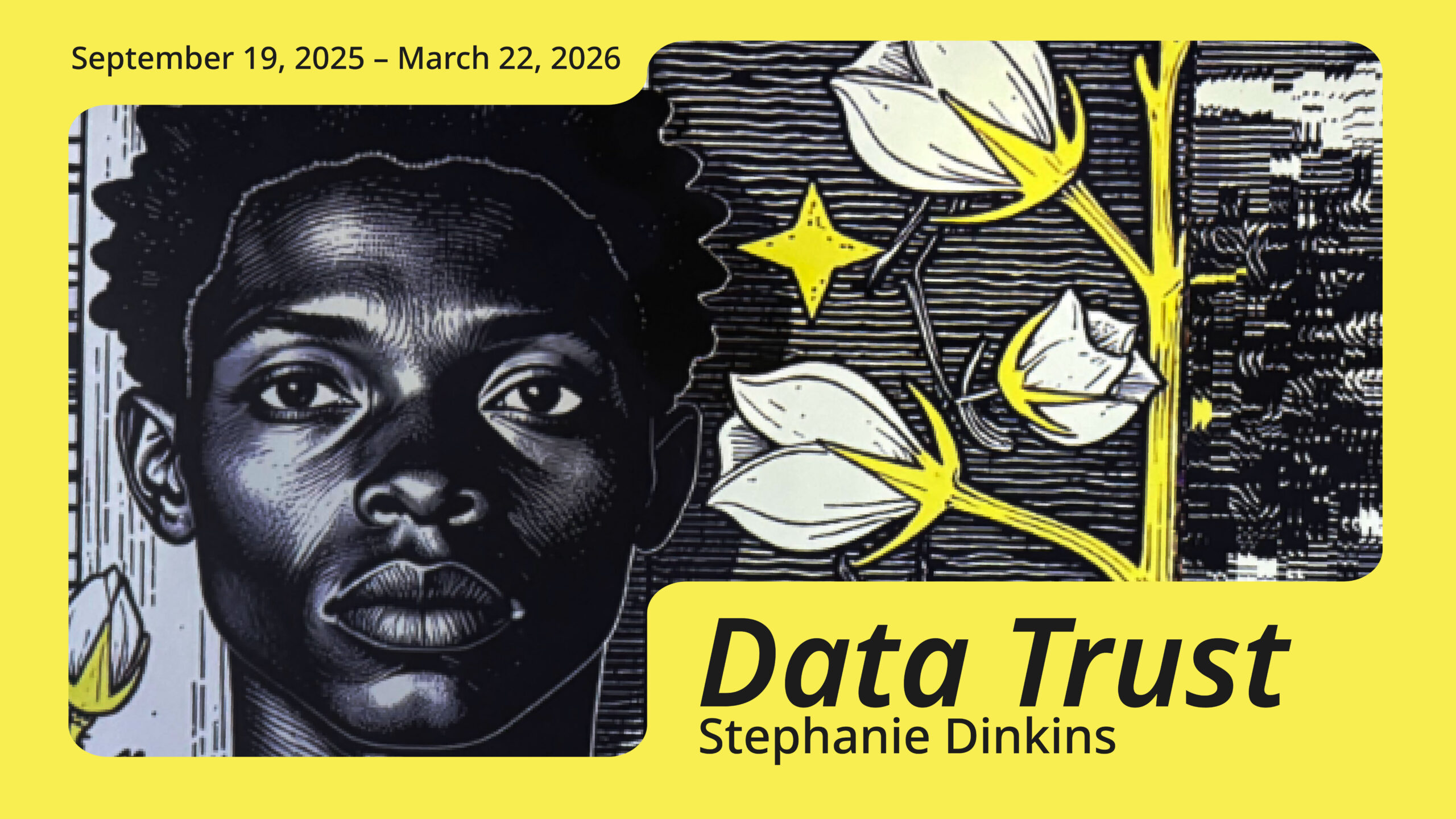

Wondering how stories, data, DNA, and AI come together in Stephanie Dinkins’ exhibition Data Trust? In the FAQs below, Dinkins shares how the project centers equity and care, and how it invites audiences to explore the relationships between personal, technological, and ecological forms of information.

Data Trust is an immersive art exhibition by artist and technologist Stephanie Dinkins. The exhibition is on view at ICA San José from September 19, 2025 to March 22, 2026.

Frequently Asked Questions

Why are you collecting stories from the public?

We’re gathering self-determined stories as a way to build datasets from the ground up—datasets rooted in care, specificity, and the multitudes of human experience. These aren’t just stories. They’re interventions—acts of refusal against systems that flatten, erase, or misrepresent us. We believe the people most impacted by technology should also shape its foundations. If we don’t, who will?

How will my story be used?

Your contribution becomes part of a data commons—created by and for the public—that helps train experimental AI models made with values of care, transparency, and community input. The system doesn’t just consume your story; it reflects it back to you through generative visuals and shared dialogue. None of this is for commercial use. It’s for collective insight and critical play.

Do I have to be an expert in AI to participate?

Absolutely not. We believe everyone has expertise—on their lives, cultures, fears, and futures. This work is designed for individuals who’ve never thought about AI, are skeptical of it, or are deeply curious. Your presence here is the expertise. Together, we demystify the system by touching it, talking to it, resisting it, and remaking it.

How does this make AI more equitable?

AI is not neutral. It inherits and amplifies the histories and hierarchies encoded into its training data. By contributing stories that speak from the margins, from the silenced and the overlooked, we challenge that foundation. This isn’t about fixing AI by adding “diverse voices.” It’s about reimagining the systems entirely, from the data up, with community consent and care at the center. This is a living model for integrating justice into our technologies.

What kinds of stories are you looking for?

We’re not looking for perfect narratives—we’re looking for truths. Stories that speak to how you move through the world, what you fear, what you love, what you hope for. The unpolished, the fragmented, the poetic and the practical. Every offering contributes to a more nuanced collective archive of being.

What will you do with my data?

We handle all contributions with reverence and radical care. Your story—your data—is anonymized, randomized, and stripped of identifying information before it enters the system. We don’t extract; we reciprocate. We don’t collect; we co-create. You’re invited to share only what you feel good about releasing to the world, knowing it will help challenge the dominant stories machines are learning to tell.

What’s the experience like?

The exhibition is a container for reflection, creativity, and resistance: equal parts sculpture, studio, and salon, housed in an upcycled shipping container created collaboratively by Dinkins and LOT-EK. Here, you can share your story, see how AI interprets it, and ask deeper questions about what this technology reflects and what it leaves out.

Conversations held through the phones, whether with another visitor or the AI, are automatically transcribed and added anonymously to the Data Trust dataset. These exchanges become part of the collective archive, extending the project’s living memory. No names or personal identifiers are attached, only the words themselves: fragments of dialogue that help shape and challenge the AI ecosystem we are building together.

Isn’t AI harmful to the environment? How does this project address that?

Yes. The energy costs of large-scale AI systems are real and urgent. That’s why this project resists the logic of “scale at all costs.” Our models are bespoke, intentionally small, and experimental. We choose care over speed, local over global, and nuance over dominance. Environmental justice is inseparable from technological justice—and both must start with community, not capital.